One of the obstacles for facial expression recognition technology is the difficulty of preparing the large amounts of data required to train detection models for each facial pose, because in real-world applications faces are usually captured in a wide variety of poses. To address the problem, Fujitsu has developed a technology that applies a different normalization process to each facial image. For example, when the subject's face is at an oblique angle, the technology can adjust the image to more closely resemble a frontal image of the face, allowing the detection model to be trained with a relatively small amount of data. The technology can accurately detect subtle emotional changes, such as uncomfortable or nervous laughter and confusion, even when the subject's face is moving in a real-world context.

Fujitsu anticipates that the new technology will find use in a variety of real-world applications, including facilitating communication to improve employee engagement and improving workplace safety for drivers and factory workers.

Background

In recent years, technologies that detect changes in facial expression from images and read human emotions have attracted increasing interest. Existing technologies have mainly been developed to detect clear changes in facial expression (e.g., the corners of the mouth and eyes moving widely). These technologies have been used in some practical applications, including automatically extracting highlight scenes from videos and enhancing robots' reactions. In the future, facial expression recognition is expected to be used more widely, including for patient monitoring in healthcare and for analyzing customers' responses to products in marketing campaigns.

Issues

In order to "read" human emotions more effectively, it's critical to capture the subtle facial changes associated with emotions like understanding, bewilderment, and stress. To accomplish this, developers have increasingly relied on Action Units (AUs), which express the "units" of movement corresponding to each muscle of the face based on an anatomical classification system. AUs have been used by professionals in fields as varied as psychological research and animation. There are approximately 30 types of AUs, classified according to the movements of individual facial muscles, such as those that move the eyebrows and cheeks. By integrating these AUs into its technology, Fujitsu has pioneered a new approach to detect even subtle changes in facial expression. However, the underlying deep learning techniques require large amounts of training data to detect AUs accurately, and in real-world situations cameras usually capture faces at various angles, sizes, and positions, making it difficult to prepare large-scale training data for each of these visual and spatial states. As a result, this variation in camera-captured images reduces detection accuracy.

Developed Technologies

In collaboration with the Carnegie Mellon University School of Computer Science, Fujitsu Laboratories, Ltd. and Fujitsu Laboratories of America Inc. have developed an AI facial expression recognition technology that can detect AUs with high accuracy even with limited training data.

1. Normalization process to adjust the face to more closely resemble the frontal image

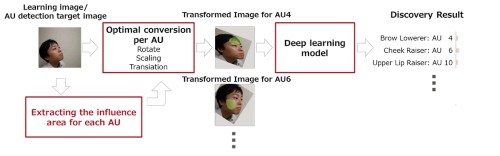

With this technology, images of the face taken at various angles, sizes, and positions are rotated, enlarged or reduced, and otherwise adjusted so that the image more closely resembles the frontal image of the face. This makes it possible to detect AUs with a small amount of training data based on the frontal view of the subject's face.
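The rotate, enlarge/reduce, and shift step can be illustrated as a 2D similarity transform fitted between detected facial feature points and a frontal template. This is a minimal sketch, assuming the feature points are already available as (x, y) pairs from some landmark detector; the template coordinates and the names `fit_similarity`, `apply_similarity`, and `FRONTAL_TEMPLATE` are hypothetical, and the closed-form least-squares fit shown here is a standard technique, not necessarily the one Fujitsu uses.

```python
import cmath
import math

# Hypothetical frontal template: left eye, right eye, nose tip, mouth center (pixels).
FRONTAL_TEMPLATE = [(30.0, 40.0), (70.0, 40.0), (50.0, 60.0), (50.0, 80.0)]


def fit_similarity(src, dst):
    """Least-squares fit of dst ~ a * src + b, treating (x, y) points as complex numbers.

    abs(a) is the scale factor, cmath.phase(a) the rotation angle (radians),
    and b the translation.
    """
    zs = [complex(x, y) for x, y in src]
    zd = [complex(x, y) for x, y in dst]
    ms = sum(zs) / len(zs)  # centroid of the detected points
    md = sum(zd) / len(zd)  # centroid of the template points
    num = sum((d - md) * (s - ms).conjugate() for s, d in zip(zs, zd))
    den = sum(abs(s - ms) ** 2 for s in zs)
    a = num / den
    return a, md - a * ms


def apply_similarity(points, a, b):
    """Rotate, scale, and translate (x, y) points with z -> a * z + b."""
    return [(z.real, z.imag) for z in (a * complex(x, y) + b for x, y in points)]


# Simulate an off-angle capture: rotate the template 20 degrees, scale it by 0.6, shift it.
observed = apply_similarity(
    FRONTAL_TEMPLATE, 0.6 * cmath.exp(1j * math.radians(20)), complex(15, -5)
)

# Recover the normalization that maps the observed points back onto the frontal template.
a, b = fit_similarity(observed, FRONTAL_TEMPLATE)
aligned = apply_similarity(observed, a, b)
print("scale:", abs(a), "rotation (deg):", math.degrees(cmath.phase(a)))
```

Because the simulated capture is an exact similarity transform, the fit recovers its inverse (a scale of 1/0.6 and a rotation of -20 degrees) and `aligned` coincides with the template; with real detections the fit instead minimizes the remaining squared error. In a full pipeline the same transform would presumably also be applied to the image pixels before AU detection.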

2. Analyzing, for each AU, the regions that significantly affect its detection

In the normalization process, multiple feature points of the face in the image are transformed so that they approach the positions of the corresponding feature points in the frontal image. However, the required amount of rotation, enlargement/reduction, and adjustment depends on which feature points of the face are selected. For example, if the rotation is computed from feature points around the eyes, the area around the eyes will closely match the reference image, but other parts such as the mouth will be out of alignment. To tackle this issue, the technology analyzes which areas of the captured face image significantly influence the detection of each AU and adjusts the degree of rotation, enlargement, and reduction accordingly. By applying a different normalization process for each individual AU, the developed technology can detect AUs with greater accuracy.
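The trade-off described above can be sketched by fitting a rotation/scale/shift alignment with landmark weights that differ per AU, so that each AU is normalized using the facial region that matters for it. This is a minimal sketch under stated assumptions: the landmark set, template coordinates, hard 0/1 weights, and the names `AU_WEIGHTS`, `fit_weighted_similarity`, and `normalize_for_au` are all hypothetical, and the weights are hand-picked rather than produced by the region analysis the technology performs. AU1 (inner brow raiser) and AU12 (lip corner puller) are standard FACS units used only as examples.

```python
import cmath
import math

# Hypothetical frontal template (x, y) in pixels, indexed as in LANDMARKS.
LANDMARKS = ["left_brow", "right_brow", "left_eye", "right_eye",
             "nose_tip", "mouth_left", "mouth_right"]
FRONTAL_TEMPLATE = [(30.0, 30.0), (70.0, 30.0), (32.0, 42.0), (68.0, 42.0),
                    (50.0, 60.0), (38.0, 78.0), (62.0, 78.0)]

# Hypothetical per-AU weights: 1.0 = landmark used for the fit, 0.0 = ignored.
# Hand-picked for illustration; not derived from any analysis of AU relevance.
AU_WEIGHTS = {
    "AU1": [1.0, 1.0, 1.0, 1.0, 0.0, 0.0, 0.0],   # inner brow raiser: upper face
    "AU12": [0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0],  # lip corner puller: lower face
}


def fit_weighted_similarity(src, dst, weights):
    """Weighted least-squares fit of dst ~ a * src + b (points as complex numbers)."""
    zs = [complex(x, y) for x, y in src]
    zd = [complex(x, y) for x, y in dst]
    wsum = sum(weights)
    ms = sum(w * s for w, s in zip(weights, zs)) / wsum  # weighted source centroid
    md = sum(w * d for w, d in zip(weights, zd)) / wsum  # weighted template centroid
    num = sum(w * (d - md) * (s - ms).conjugate() for w, s, d in zip(weights, zs, zd))
    den = sum(w * abs(s - ms) ** 2 for w, s in zip(weights, zs))
    a = num / den
    return a, md - a * ms


def normalize_for_au(points, au):
    """Align observed landmarks to the template using the weights for one AU."""
    a, b = fit_weighted_similarity(points, FRONTAL_TEMPLATE, AU_WEIGHTS[au])
    return [(z.real, z.imag) for z in (a * complex(x, y) + b for x, y in points)]


def mean_error(aligned, indices):
    """Mean distance to the template over the selected landmark indices."""
    return sum(math.dist(aligned[i], FRONTAL_TEMPLATE[i]) for i in indices) / len(indices)


# Simulated observation: the lower face is offset by (6, 4) px relative to the upper
# face (a stand-in for distortion one global transform cannot remove), then the whole
# face is rotated 20 degrees, scaled by 0.6, and shifted.
g, c, k = 0.6 * cmath.exp(1j * math.radians(20)), complex(15, -5), complex(6, 4)
observed = []
for i, (x, y) in enumerate(FRONTAL_TEMPLATE):
    z = g * (complex(x, y) + (k if i >= 4 else 0)) + c
    observed.append((z.real, z.imag))

MOUTH = [5, 6]
for au in AU_WEIGHTS:
    print(au, "mouth-corner error:", mean_error(normalize_for_au(observed, au), MOUTH))
```

With these inputs, the AU12 alignment places the mouth corners exactly on the template, while the AU1 alignment matches the brows and eyes but leaves the mouth corners offset by the simulated (6, 4) px shift, mirroring the eyes-versus-mouth example in the text. Soft weights between 0 and 1 would trade these errors off more gradually.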

Outcome

The technology achieved a high detection accuracy rate of 81% even with limited training data. It was also more accurate than other existing technologies on facial expression recognition benchmarks from the Facial Expression Recognition and Analysis Challenge 2017.

Future Plans

Fujitsu aims to bring the technology into practical use for various use cases, including teleconferencing support, employee engagement measurement, and driver monitoring.